StyleGAN: network.py

Source code

The following is the last part of the run method of the Network class in network.py.


```python
class Network:
    def run(self,
            *in_arrays: Tuple[Union[np.ndarray, None], ...],
            input_transform: dict = None,
            output_transform: dict = None,
            return_as_list: bool = False,
            print_progress: bool = False,
            minibatch_size: int = None,
            num_gpus: int = 1,
            assume_frozen: bool = False,
            **dynamic_kwargs) -> Union[np.ndarray, Tuple[np.ndarray, ...], List[np.ndarray]]:
        assert len(in_arrays) == self.num_inputs
        assert not all(arr is None for arr in in_arrays)
        assert input_transform is None or util.is_top_level_function(input_transform["func"])
        assert output_transform is None or util.is_top_level_function(output_transform["func"])
        output_transform, dynamic_kwargs = _handle_legacy_output_transforms(output_transform, dynamic_kwargs)
        num_items = in_arrays[0].shape[0]
        if minibatch_size is None:
            minibatch_size = num_items

        # Construct unique hash key from all arguments that affect the TensorFlow graph.
        # ... (omitted)

        # Build graph.
        # ... (omitted)

        # Run minibatches.
        in_expr, out_expr = self._run_cache[key]
        out_arrays = [np.empty([num_items] + tfutil.shape_to_list(expr.shape)[1:], expr.dtype.name) for expr in out_expr]

        for mb_begin in range(0, num_items, minibatch_size):
            if print_progress:
                print("\r%d / %d" % (mb_begin, num_items), end="")

            mb_end = min(mb_begin + minibatch_size, num_items)
            mb_num = mb_end - mb_begin
            mb_in = [src[mb_begin : mb_end] if src is not None else np.zeros([mb_num] + shape[1:]) for src, shape in zip(in_arrays, self.input_shapes)]
            mb_out = tf.get_default_session().run(out_expr, dict(zip(in_expr, mb_in)))

            for dst, src in zip(out_arrays, mb_out):
                dst[mb_begin: mb_end] = src

        # Done.
        if print_progress:
            print("\r%d / %d" % (num_items, num_items))

        if not return_as_list:
            out_arrays = out_arrays[0] if len(out_arrays) == 1 else tuple(out_arrays)
        return out_arrays


## Dependency function
def shape_to_list(shape: Iterable[tf.Dimension]) -> List[Union[int, None]]:  # located in dnnlib/tflib
    """Convert a TF shape to a list."""
    return [dim.value for dim in shape]
```

Run minibatches

in_expr and out_expr are two lists. out_arrays is created using out_expr as a template, and there is only one output here, so its single element has shape [1, 1024, 1024, 3]:


```python
# Results obtained when running pretrained.py
print(in_expr)
# [<tf.Tensor 'Gs/_Run/latents_in:0' shape=<unknown> dtype=float32>, <tf.Tensor 'Gs/_Run/labels_in:0' shape=<unknown> dtype=float32>]
print(out_expr)
# [<tf.Tensor 'Gs/_Run/concat:0' shape=(?, 1024, 1024, 3) dtype=uint8>]
print(tfutil.shape_to_list(expr.shape)[1:])
# [1024, 1024, 3]
print(num_items)
# 1
print([num_items] + tfutil.shape_to_list(expr.shape)[1:])
# [1, 1024, 1024, 3]
```

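If you are interested in the data flow in the graph and the scopes the authors defined, you can do the following. The generated graph is very clear and readable, and worth studying.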


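```python
"""
Write the names of the ops, variables, tensors, etc. in the default graph
(usually defined by name_scope or variable_scope) to a txt file, and write
the graph for TensorBoard; open it from a terminal with
"tensorboard --logdir ./log".
"""
import tensorflow as tf  # TensorFlow 1.x API

with open('./graph_names.txt', 'w') as f:
    sep = '\n'
    test_names = [n.name for n in tf.get_default_graph().as_graph_def().node]
    f.write(sep.join(test_names))

summary_writer = tf.summary.FileWriter("log", tf.get_default_graph())
summary_writer.close()  # flush the event file to disk
```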

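In the following for loop, minibatch_size is one of the parameters accepted by run. If it is None, then minibatch_size = num_items, where num_items = in_arrays[0].shape[0], so the loop executes only once. That is what happens when pretrained.py runs. There num_items is 1, so mb_begin is 0 and both mb_end and mb_num are 1.

mb_in is then a list of two np.ndarray objects with shapes (1, 512) and (1, 0). In fact mb_in[0] is identical to in_arrays[0]; you can check whether two arrays are identical with (a == b).all(). The call mb_out = tf.get_default_session().run(...) is where the actual inference happens, and mb_out holds the predictions. Finally, the data in each src (an element of mb_out) is copied into the corresponding slice of dst (an element of out_arrays).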
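To make the loop concrete without a TensorFlow session, here is a minimal NumPy sketch of the same minibatch logic. It is an illustration under stated assumptions, not StyleGAN code:

- `run_minibatches` and `fake_forward` are hypothetical names.
- `fake_forward` stands in for `tf.get_default_session().run(out_expr, ...)`.
- The output buffers are assumed to be float32 instead of taking their dtype from `out_expr`.

```python
from typing import Callable, List, Optional, Sequence, Tuple

import numpy as np


def run_minibatches(forward: Callable[[List[np.ndarray]], List[np.ndarray]],
                    in_arrays: Sequence[Optional[np.ndarray]],
                    input_shapes: Sequence[Tuple[Optional[int], ...]],
                    out_shapes: Sequence[Tuple[Optional[int], ...]],
                    minibatch_size: Optional[int] = None) -> List[np.ndarray]:
    """NumPy analogue of the loop in Network.run; `forward` replaces session.run."""
    num_items = in_arrays[0].shape[0]
    if minibatch_size is None:
        minibatch_size = num_items  # one minibatch covering all items
    # Preallocate outputs: batch dim from num_items, other dims from the output template.
    # Assumption: float32 buffers (run() uses expr.dtype.name instead).
    out_arrays = [np.empty([num_items] + list(shape[1:]), dtype=np.float32) for shape in out_shapes]
    for mb_begin in range(0, num_items, minibatch_size):
        mb_end = min(mb_begin + minibatch_size, num_items)
        mb_num = mb_end - mb_begin
        # Missing (None) inputs become zero arrays with the minibatch's batch size.
        mb_in = [src[mb_begin:mb_end] if src is not None else np.zeros([mb_num] + list(shape[1:]))
                 for src, shape in zip(in_arrays, input_shapes)]
        mb_out = forward(mb_in)
        # Copy each minibatch result into its slice of the preallocated output.
        for dst, src in zip(out_arrays, mb_out):
            dst[mb_begin:mb_end] = src
    return out_arrays


# Hypothetical "network": sums each latent vector and ignores the (empty) labels.
def fake_forward(mb_in: List[np.ndarray]) -> List[np.ndarray]:
    latents, labels = mb_in
    return [latents.sum(axis=1, keepdims=True)]


latents = np.ones((5, 512), dtype=np.float32)
outs = run_minibatches(fake_forward, [latents, None], [(None, 512), (None, 0)], [(None, 1)], minibatch_size=2)
print(outs[0].shape)  # (5, 1)
```

With `minibatch_size=2` the loop visits the slices [0:2], [2:4] and [4:5]. With the default `None`, it runs once over all five items, as in the pretrained.py case.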

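Finally, run checks whether the outputs should be returned as a list, and then returns. The most important line in run is tf.get_default_session().run(). It evaluates out_expr in the default session, feeding each array in mb_in into the corresponding placeholder in in_expr through the feed dict dict(zip(in_expr, mb_in)).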
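The return-format step can be isolated in a small sketch. `format_outputs` is a hypothetical helper that copies the last lines of `run`. It is used here only to show what type the caller receives in each case:

```python
from typing import List, Tuple, Union

import numpy as np


def format_outputs(out_arrays: List[np.ndarray],
                   return_as_list: bool = False) -> Union[np.ndarray, Tuple[np.ndarray, ...], List[np.ndarray]]:
    """Mirror the final step of Network.run: choose how the outputs are returned."""
    if not return_as_list:
        # A single output is returned bare; several outputs become a tuple.
        return out_arrays[0] if len(out_arrays) == 1 else tuple(out_arrays)
    return out_arrays


img = np.zeros((1, 1024, 1024, 3), dtype=np.uint8)
print(type(format_outputs([img])).__name__)        # ndarray
print(type(format_outputs([img, img])).__name__)   # tuple
print(type(format_outputs([img], True)).__name__)  # list
```

This is why a caller with a single output, such as pretrained.py, gets a bare array back rather than a one-element list.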

Note: the dimensions of a tf.Tensor can be read with `for dim in tensor.shape: print(dim.value)`. The `?` shown for the batch dimension is actually None.
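Here is a sketch of how the unknown batch dimension is handled when the output buffer is allocated. `buffer_shape` is a hypothetical helper. Its error for unknown non-batch dimensions is an added check, since `np.empty` cannot allocate an array with a None dimension; run itself has no such check:

```python
from typing import List, Optional

import numpy as np


def buffer_shape(num_items: int, static_shape: List[Optional[int]]) -> List[int]:
    """Drop the batch dim (None, printed as '?') and prepend the concrete item count."""
    if any(d is None for d in static_shape[1:]):
        raise ValueError("non-batch dimensions must be known to preallocate a buffer")
    return [num_items] + list(static_shape[1:])


# Static shape of out_expr[0] as printed above: (?, 1024, 1024, 3).
shape = buffer_shape(1, [None, 1024, 1024, 3])
out = np.empty(shape, dtype=np.uint8)
print(out.shape)  # (1, 1024, 1024, 3)
```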

get_output_for

get_output_for is another method of the Network class:


```python
def get_output_for(self, *in_expr: TfExpression, return_as_list: bool = False, **dynamic_kwargs) -> Union[TfExpression, List[TfExpression]]:
    """Construct TensorFlow expression(s) for the output(s) of this network, given the input expression(s)."""
    assert len(in_expr) == self.num_inputs
    assert not all(expr is None for expr in in_expr)

    # Finalize build func kwargs.
    build_kwargs = dict(self.static_kwargs)
    build_kwargs.update(dynamic_kwargs)
    build_kwargs["is_template_graph"] = False
    build_kwargs["components"] = self.components

    # Build TensorFlow graph to evaluate the network.
    with tfutil.absolute_variable_scope(self.scope, reuse=True), tf.name_scope(self.name):
        assert tf.get_variable_scope().name == self.scope
        valid_inputs = [expr for expr in in_expr if expr is not None]
        final_inputs = []
        for expr, name, shape in zip(in_expr, self.input_names, self.input_shapes):
            if expr is not None:
                expr = tf.identity(expr, name=name)
            else:
                expr = tf.zeros([tf.shape(valid_inputs[0])[0]] + shape[1:], name=name)
            final_inputs.append(expr)
        out_expr = self._build_func(*final_inputs, **build_kwargs)

    # Propagate input shapes back to the user-specified expressions.
    for expr, final in zip(in_expr, final_inputs):
        if isinstance(expr, tf.Tensor):
            expr.set_shape(final.shape)

    # Express outputs in the desired format.
    assert tfutil.is_tf_expression(out_expr) or isinstance(out_expr, tuple)
    if return_as_list:
        out_expr = [out_expr] if tfutil.is_tf_expression(out_expr) else list(out_expr)
    return out_expr


## Other modules used by the function above
TfExpression = Union[tf.Tensor, tf.Variable, tf.Operation]

def is_tf_expression(x: Any) -> bool:
    return isinstance(x, (tf.Tensor, tf.Variable, tf.Operation))

def absolute_variable_scope(scope: str, **kwargs) -> tf.variable_scope:
    return tf.variable_scope(tf.VariableScope(name=scope, **kwargs), auxiliary_name_scope=False)
```

TfExpression is defined as a valid TF expression: a typing.Union of tf.Tensor, tf.Variable and tf.Operation. Next, let's look at the dict update method, which get_output_for uses to merge dynamic_kwargs into a copy of static_kwargs:

```python
a = {'1': 11, '2': 22, '3': 33,}
```
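Here is a minimal sketch of how `dict.update` behaves in the `build_kwargs` step of get_output_for. The keys and values are illustrative assumptions, not the kwargs of the actual pretrained network:

```python
# Hypothetical kwargs: static ones fixed at construction, dynamic ones passed per call.
static_kwargs = {"resolution": 1024, "truncation_psi": 0.5}
dynamic_kwargs = {"truncation_psi": 0.7, "randomize_noise": True}

build_kwargs = dict(static_kwargs)      # copy, so static_kwargs itself is not modified
build_kwargs.update(dynamic_kwargs)     # existing keys are overwritten, new keys are added
build_kwargs["is_template_graph"] = False

print(build_kwargs)
# {'resolution': 1024, 'truncation_psi': 0.7, 'randomize_noise': True, 'is_template_graph': False}
print(static_kwargs)
# {'resolution': 1024, 'truncation_psi': 0.5}
```

Copying first means per-call arguments take priority without permanently changing the network's stored static_kwargs.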

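For how scopes are used, see "tensorflow之scope使用" (Using scopes in TensorFlow). Scopes control the naming scope of variables and make variable reuse possible. In complex networks they also make the graph drawn by TensorBoard clearer, which helps with debugging.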

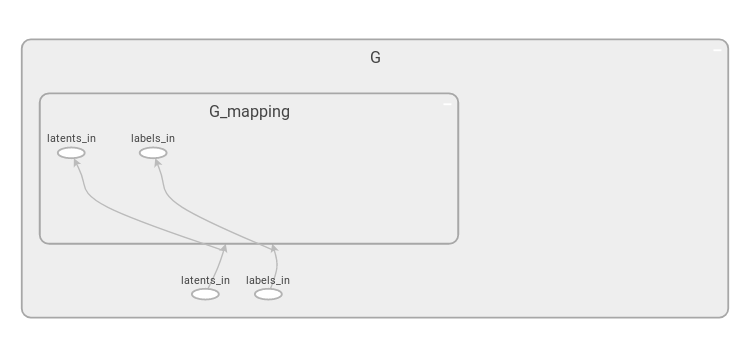

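First, the assert statement gets the current variable scope and checks that its name equals self.scope. Then the non-None entries of in_expr are collected as valid inputs in the list valid_inputs.

Next, each input is passed through tf.identity, which is important here. It creates a new op, named after the input (name=name), inside the current name scope, and this op connects the user-supplied tensor to the computation the network builds. When pretrained.py runs, the first call to get_output_for maps G/latents_in and G/labels_in to G/G_mapping/latents_in and G/G_mapping/labels_in. In other words, the inputs reappear under the sub-network's scope.

In addition, because reuse=True is set, tf.get_variable calls inside this scope return the variables that already exist under self.scope instead of creating new ones. valid_inputs itself is just a Python list of the input tensors and involves no variables.

Let's look at the effect of these operations with a simple example: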

What happens

```python
## Reproduce the same scopes and variables as in the source code
```
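Alongside the scope example, the input-handling part of the get_output_for loop described above can be sketched in NumPy. This is an assumption-laden analogue, not the real method:

- `finalize_inputs` is a hypothetical name.
- NumPy arrays replace TF tensors.
- Naming, scopes and tf.identity are left out.

As in the source, a None input is replaced by zeros whose batch size comes from the first valid input:

```python
from typing import List, Optional, Sequence, Tuple

import numpy as np


def finalize_inputs(in_expr: Sequence[Optional[np.ndarray]],
                    input_shapes: Sequence[Tuple[Optional[int], ...]]) -> List[np.ndarray]:
    """NumPy analogue of the final_inputs loop in get_output_for (no TF scopes or naming)."""
    assert len(in_expr) == len(input_shapes)
    assert not all(x is None for x in in_expr)
    valid_inputs = [x for x in in_expr if x is not None]
    batch = valid_inputs[0].shape[0]  # plays the role of tf.shape(valid_inputs[0])[0]
    final_inputs = []
    for x, shape in zip(in_expr, input_shapes):
        if x is not None:
            final_inputs.append(x)  # in TF, tf.identity would add a named alias here
        else:
            final_inputs.append(np.zeros([batch] + list(shape[1:]), dtype=np.float32))
    return final_inputs


latents = np.random.randn(4, 512).astype(np.float32)
latents_in, labels_in = finalize_inputs([latents, None], [(None, 512), (None, 0)])
print(latents_in.shape, labels_in.shape)  # (4, 512) (4, 0)
```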

tf.identity


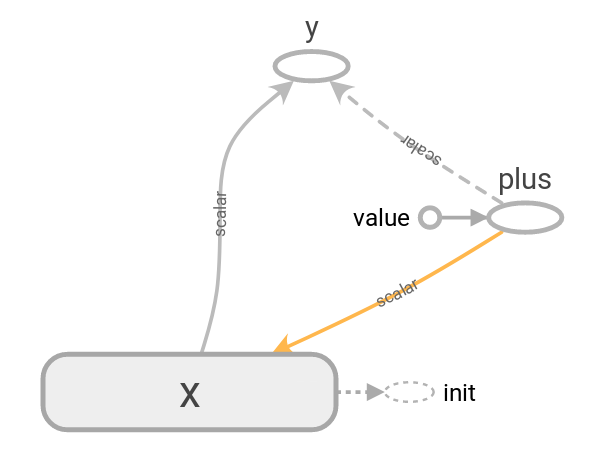

The code above defines an op, plus, that increments the variable x. Code inside the tf.control_dependencies([plus]) context runs only after plus has executed (that is, after the increment), so sess.run(y) depends on plus.

_build_func

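Here _build_func is one of the functions G_mapping, G_synthesis or G_style, whose source is in training/networks_stylegan.py. The data flow is as follows:

set_shape can set the static shape of a placeholder whose shape is unknown: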

```python
test = tf.placeholder(tf.float32, shape=None, name="Test")  # shape=None: the shape is unknown
```

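expr.set_shape(final.shape) gives each user-supplied input tensor whose shape was unknown the expected shape, namely the shape of the corresponding tensor in final_inputs. set_shape only refines the static shape information. It does not reshape any data, and it raises an error if the two shapes are incompatible.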
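A simplified Python model of the shape refinement that `set_shape` performs may help. `merge_shapes` is a hypothetical helper, not TensorFlow's implementation. It represents an unknown rank as None and unknown dimensions as None entries. It fills unknown dimensions from the new shape and raises on a conflict:

```python
from typing import Optional, Tuple

# None means unknown rank; None entries inside the tuple mean unknown dimensions.
Shape = Optional[Tuple[Optional[int], ...]]


def merge_shapes(current: Shape, new: Shape) -> Shape:
    """Simplified model of how set_shape refines a static shape (not TensorFlow's code)."""
    if current is None:
        return new
    if new is None:
        return current
    if len(current) != len(new):
        raise ValueError(f"rank mismatch: {current} vs {new}")
    merged = []
    for a, b in zip(current, new):
        if a is not None and b is not None and a != b:
            raise ValueError(f"incompatible dims: {current} vs {new}")
        merged.append(a if a is not None else b)
    return tuple(merged)


print(merge_shapes(None, (None, 512)))      # (None, 512)
print(merge_shapes((None, 512), (8, 512)))  # (8, 512)
```

The first call corresponds to the situation in get_output_for: the placeholder starts with an unknown shape (like `latents_in` printed earlier with `shape=<unknown>`) and receives the network's expected input shape.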

Return

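return out_expr returns the output expression(s): the symbolic tensor(s) for the generated high-resolution image. The pixel values are only produced when these expressions are evaluated in a session, as run() does with tf.get_default_session().run().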